Automatidata Project Lab

Table of Contents

Foundations of Data Science - This article is part of a series.

Automatidata project #

Course 2 - Get Started with Python

Course 2 end-of-course project: Inspect and analyze data #

In this activity, you will examine data provided and prepare it for analysis.

The purpose of this project is to investigate and understand the data provided.

The goal is to use a dataframe contructed within Python, perform a cursory inspection of the provided dataset and inform team members of your findings.

This activity has three parts:

Part 1: Understand the situation

- How can you best prepare to understand and organize the provided taxi cab information?

Part 2: Understand the data

Create a pandas dataframe for data learning, and future exploratory data analysis (EDA) and statistical activities.

View and interpret the datasets data table

Sort and interpret the data table for two variables of your choice.

Part 3: Understand the variables

What is the min, mean, and max of your chosen variables?

Visualize the variables

Follow the instructions and answer the following questions to complete the activity. Then, you will complete an Executive Summary using the questions listed on the PACE Strategy Document.

Be sure to complete this activity before moving on. The next course item will provide you with a completed exemplar to compare to your own work.

Identify data types and relevant variables using Python #

Exercise Instructions: #

Complete the following step-by-step instructions to inspect and analyze this NYC taxi dataset.

This activity will help ensure the information is:

Ready to answer questions and yield insights

Ready for visualizations

Ready for future hypothesis testing and statistical methods

Follow the instructions and answer questions to complete this activity. Afterward,

Write a short Executive Summary using your findings.

Use the structured notebook provided to help you in this project. Please complete the questions inside and prepare a summary for the data team.

Consider the questions presented in the Course 2 PACE strategy document.

Compare your data insights with the provided exemplar to confirm of your approach and results.

Throughout these project notebooks, you’ll see references to the problem-solving framework PACE. The following notebook components are labeled with the respective PACE stage: Plan, Analyze, Construct, and Execute.

PACE: Plan #

Step 1a. Understand the situation #

- How can you best prepare to understand and organize the provided taxi cab information?

Begin by exploring your dataset and consider reviewing the Data Dictionary.

### **==> EXEMPLAR CODE and OUTPUT**

#

# Question 1: How best to prepare?

#

# Q1 Answer:

# By reading the taxi cab data fields and ensuring I understand how

# each one impacts the dataset. Reviewing the fact sheet could also

# provide helpful background information.

# However, my primary goal is to get the data into Python, inspect it,

# and provide DeShawn with my initial observations.

# Afterwards, I can learn more deeply about the data and check

# for any anomalies.

Step 1b. Import dataframe #

Create a pandas dataframe for data learning, and future exploratory data analysis (EDA) and statistical activities.

Code the following,

import pandas as pd #library exercise for buidling dataframes

import numpy as np #numpy is imported with pandas

import matplotlib.pyplot as plt #visualization library

import seaborn as sns #visualization library

df = pd.read_csv(‘2017_Yellow_Taxi_Trip_Data.csv’)

Note: pair the data object name “df” with pandas functions to manipulate data, such as df.groupby().

# ==> EXEMPLAR CODE and OUTPUT

import pandas as pd #library exercise for buidling dataframes

import numpy as np #numpy is imported with pandas

import matplotlib.pyplot as plt #visualization library

import seaborn as sns #visualization library

df = pd.read_csv('2017_Yellow_Taxi_Trip_Data.csv')

print("done")

done

PACE: Analyze #

Step 2a. Understand the data - Interpret the data table #

View and interpret the datasets data table by coding the following:

- df.head(10)

- df.info()

Consider the following two questions:

Question 1: When reviewing the df.head(10) output, are there any data points that surprise you or are not correct?

Question 2: When reviewing the df.info() output, what kind of data types are we working with?

# ==> EXEMPLAR CODE and OUTPUT

df.head(10)

| Unnamed: 0 | VendorID | tpep_pickup_datetime | tpep_dropoff_datetime | passenger_count | trip_distance | RatecodeID | store_and_fwd_flag | PULocationID | DOLocationID | payment_type | fare_amount | extra | mta_tax | tip_amount | tolls_amount | improvement_surcharge | total_amount | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 24870114 | 2 | 03/25/2017 8:55:43 AM | 03/25/2017 9:09:47 AM | 6 | 3.34 | 1 | N | 100 | 231 | 1 | 13.0 | 0.0 | 0.5 | 2.76 | 0.0 | 0.3 | 16.56 |

| 1 | 35634249 | 1 | 04/11/2017 2:53:28 PM | 04/11/2017 3:19:58 PM | 1 | 1.80 | 1 | N | 186 | 43 | 1 | 16.0 | 0.0 | 0.5 | 4.00 | 0.0 | 0.3 | 20.80 |

| 2 | 106203690 | 1 | 12/15/2017 7:26:56 AM | 12/15/2017 7:34:08 AM | 1 | 1.00 | 1 | N | 262 | 236 | 1 | 6.5 | 0.0 | 0.5 | 1.45 | 0.0 | 0.3 | 8.75 |

| 3 | 38942136 | 2 | 05/07/2017 1:17:59 PM | 05/07/2017 1:48:14 PM | 1 | 3.70 | 1 | N | 188 | 97 | 1 | 20.5 | 0.0 | 0.5 | 6.39 | 0.0 | 0.3 | 27.69 |

| 4 | 30841670 | 2 | 04/15/2017 11:32:20 PM | 04/15/2017 11:49:03 PM | 1 | 4.37 | 1 | N | 4 | 112 | 2 | 16.5 | 0.5 | 0.5 | 0.00 | 0.0 | 0.3 | 17.80 |

| 5 | 23345809 | 2 | 03/25/2017 8:34:11 PM | 03/25/2017 8:42:11 PM | 6 | 2.30 | 1 | N | 161 | 236 | 1 | 9.0 | 0.5 | 0.5 | 2.06 | 0.0 | 0.3 | 12.36 |

| 6 | 37660487 | 2 | 05/03/2017 7:04:09 PM | 05/03/2017 8:03:47 PM | 1 | 12.83 | 1 | N | 79 | 241 | 1 | 47.5 | 1.0 | 0.5 | 9.86 | 0.0 | 0.3 | 59.16 |

| 7 | 69059411 | 2 | 08/15/2017 5:41:06 PM | 08/15/2017 6:03:05 PM | 1 | 2.98 | 1 | N | 237 | 114 | 1 | 16.0 | 1.0 | 0.5 | 1.78 | 0.0 | 0.3 | 19.58 |

| 8 | 8433159 | 2 | 02/04/2017 4:17:07 PM | 02/04/2017 4:29:14 PM | 1 | 1.20 | 1 | N | 234 | 249 | 2 | 9.0 | 0.0 | 0.5 | 0.00 | 0.0 | 0.3 | 9.80 |

| 9 | 95294817 | 1 | 11/10/2017 3:20:29 PM | 11/10/2017 3:40:55 PM | 1 | 1.60 | 1 | N | 239 | 237 | 1 | 13.0 | 0.0 | 0.5 | 2.75 | 0.0 | 0.3 | 16.55 |

# ==> EXEMPLAR CODE and OUTPUT

df.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 22699 entries, 0 to 22698

Data columns (total 18 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Unnamed: 0 22699 non-null int64

1 VendorID 22699 non-null int64

2 tpep_pickup_datetime 22699 non-null object

3 tpep_dropoff_datetime 22699 non-null object

4 passenger_count 22699 non-null int64

5 trip_distance 22699 non-null float64

6 RatecodeID 22699 non-null int64

7 store_and_fwd_flag 22699 non-null object

8 PULocationID 22699 non-null int64

9 DOLocationID 22699 non-null int64

10 payment_type 22699 non-null int64

11 fare_amount 22699 non-null float64

12 extra 22699 non-null float64

13 mta_tax 22699 non-null float64

14 tip_amount 22699 non-null float64

15 tolls_amount 22699 non-null float64

16 improvement_surcharge 22699 non-null float64

17 total_amount 22699 non-null float64

dtypes: float64(8), int64(7), object(3)

memory usage: 3.1+ MB

Step 2b. Understand the data - Sort by variables #

Sort and interpret the data table for two variables of your choice.

Answer the following three questions:

Question 1: Sort your first variable (trip_distance) from maximum to minimum value, do the values seem normal?

Question 2: Sort your by your second variable (total_amount), are any values unusual?

Question 3: Are the resulting rows similar for both sorts? Why or why not?

# ==> EXEMPLAR CODE and OUTPUT

df_sort = df.sort_values(by=['trip_distance'],ascending=False)

df_sort.head(10)

| Unnamed: 0 | VendorID | tpep_pickup_datetime | tpep_dropoff_datetime | passenger_count | trip_distance | RatecodeID | store_and_fwd_flag | PULocationID | DOLocationID | payment_type | fare_amount | extra | mta_tax | tip_amount | tolls_amount | improvement_surcharge | total_amount | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 9280 | 51810714 | 2 | 06/18/2017 11:33:25 PM | 06/19/2017 12:12:38 AM | 2 | 33.96 | 5 | N | 132 | 265 | 2 | 150.00 | 0.0 | 0.0 | 0.00 | 0.00 | 0.3 | 150.30 |

| 13861 | 40523668 | 2 | 05/19/2017 8:20:21 AM | 05/19/2017 9:20:30 AM | 1 | 33.92 | 5 | N | 229 | 265 | 1 | 200.01 | 0.0 | 0.5 | 51.64 | 5.76 | 0.3 | 258.21 |

| 6064 | 49894023 | 2 | 06/13/2017 12:30:22 PM | 06/13/2017 1:37:51 PM | 1 | 32.72 | 3 | N | 138 | 1 | 1 | 107.00 | 0.0 | 0.0 | 55.50 | 16.26 | 0.3 | 179.06 |

| 10291 | 76319330 | 2 | 09/11/2017 11:41:04 AM | 09/11/2017 12:18:58 PM | 1 | 31.95 | 4 | N | 138 | 265 | 2 | 131.00 | 0.0 | 0.5 | 0.00 | 0.00 | 0.3 | 131.80 |

| 29 | 94052446 | 2 | 11/06/2017 8:30:50 PM | 11/07/2017 12:00:00 AM | 1 | 30.83 | 1 | N | 132 | 23 | 1 | 80.00 | 0.5 | 0.5 | 18.56 | 11.52 | 0.3 | 111.38 |

| 18130 | 90375786 | 1 | 10/26/2017 2:45:01 PM | 10/26/2017 4:12:49 PM | 1 | 30.50 | 1 | N | 132 | 220 | 1 | 90.50 | 0.0 | 0.5 | 19.85 | 8.16 | 0.3 | 119.31 |

| 5792 | 68023798 | 2 | 08/11/2017 2:14:01 PM | 08/11/2017 3:17:31 PM | 1 | 30.33 | 2 | N | 132 | 158 | 1 | 52.00 | 0.0 | 0.5 | 14.64 | 5.76 | 0.3 | 73.20 |

| 15350 | 77309977 | 2 | 09/14/2017 1:44:44 PM | 09/14/2017 2:34:29 PM | 1 | 28.23 | 2 | N | 13 | 132 | 1 | 52.00 | 0.0 | 0.5 | 4.40 | 5.76 | 0.3 | 62.96 |

| 10302 | 43431843 | 1 | 05/15/2017 8:11:34 AM | 05/15/2017 9:03:16 AM | 1 | 28.20 | 2 | N | 90 | 132 | 1 | 52.00 | 0.0 | 0.5 | 11.71 | 5.76 | 0.3 | 70.27 |

| 2592 | 51094874 | 2 | 06/16/2017 6:51:20 PM | 06/16/2017 7:41:42 PM | 1 | 27.97 | 2 | N | 261 | 132 | 2 | 52.00 | 4.5 | 0.5 | 0.00 | 5.76 | 0.3 | 63.06 |

# ==> EXEMPLAR CODE and OUTPUT

df_sort = df.sort_values(by=['total_amount'],ascending=False)

df_sort.head(10)

| Unnamed: 0 | VendorID | tpep_pickup_datetime | tpep_dropoff_datetime | passenger_count | trip_distance | RatecodeID | store_and_fwd_flag | PULocationID | DOLocationID | payment_type | fare_amount | extra | mta_tax | tip_amount | tolls_amount | improvement_surcharge | total_amount | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 8476 | 11157412 | 1 | 02/06/2017 5:50:10 AM | 02/06/2017 5:51:08 AM | 1 | 2.60 | 5 | N | 226 | 226 | 1 | 999.99 | 0.0 | 0.0 | 200.00 | 0.00 | 0.3 | 1200.29 |

| 20312 | 107558404 | 2 | 12/19/2017 9:40:46 AM | 12/19/2017 9:40:55 AM | 2 | 0.00 | 5 | N | 265 | 265 | 2 | 450.00 | 0.0 | 0.0 | 0.00 | 0.00 | 0.3 | 450.30 |

| 13861 | 40523668 | 2 | 05/19/2017 8:20:21 AM | 05/19/2017 9:20:30 AM | 1 | 33.92 | 5 | N | 229 | 265 | 1 | 200.01 | 0.0 | 0.5 | 51.64 | 5.76 | 0.3 | 258.21 |

| 12511 | 107108848 | 2 | 12/17/2017 6:24:24 PM | 12/17/2017 6:24:42 PM | 1 | 0.00 | 5 | N | 265 | 265 | 1 | 175.00 | 0.0 | 0.0 | 46.69 | 11.75 | 0.3 | 233.74 |

| 15474 | 55538852 | 2 | 06/06/2017 8:55:01 PM | 06/06/2017 8:55:06 PM | 1 | 0.00 | 5 | N | 265 | 265 | 1 | 200.00 | 0.0 | 0.5 | 11.00 | 0.00 | 0.3 | 211.80 |

| 6064 | 49894023 | 2 | 06/13/2017 12:30:22 PM | 06/13/2017 1:37:51 PM | 1 | 32.72 | 3 | N | 138 | 1 | 1 | 107.00 | 0.0 | 0.0 | 55.50 | 16.26 | 0.3 | 179.06 |

| 16379 | 101198443 | 2 | 11/30/2017 10:41:11 AM | 11/30/2017 11:31:45 AM | 1 | 25.50 | 5 | N | 132 | 265 | 2 | 140.00 | 0.0 | 0.5 | 0.00 | 16.26 | 0.3 | 157.06 |

| 3582 | 111653084 | 1 | 01/01/2017 11:53:01 PM | 01/01/2017 11:53:42 PM | 1 | 7.30 | 5 | N | 1 | 1 | 1 | 152.00 | 0.0 | 0.0 | 0.00 | 0.00 | 0.3 | 152.30 |

| 11269 | 51920669 | 1 | 06/19/2017 12:51:17 AM | 06/19/2017 12:52:12 AM | 2 | 0.00 | 5 | N | 265 | 265 | 1 | 120.00 | 0.0 | 0.0 | 20.00 | 11.52 | 0.3 | 151.82 |

| 9280 | 51810714 | 2 | 06/18/2017 11:33:25 PM | 06/19/2017 12:12:38 AM | 2 | 33.96 | 5 | N | 132 | 265 | 2 | 150.00 | 0.0 | 0.0 | 0.00 | 0.00 | 0.3 | 150.30 |

Exemplar response: The two outputs in the table show different rows of data. A reason for this observation might be because there are trips that have a shorter distance, yet higher total amount (total ride fare).

Step 2c. Understand the data - Use df.describe() #

Instructions: Using pandas df.describe(), what is the min, mean, and max of your chosen variables?

Code and perform the following:

df.describe()

Question 1: What is the min, mean, and max of your first variable?

Question 2: What is the min, mean, and max of second variable?

Question 3: Are the values easily readable? Would could be done to make them more easily readable?

# ==> EXEMPLAR CODE and OUTPUT

df.describe()

#Question 1: what is the min, mean and max of your first variable ?

#Answer: Min Mean Max

#total_amount -120.30 16.31 1200.29

#Question 2: what is the min, mean, and max of your second variable?

#Answer: Min Mean Max

#trip_distance 0 2.91 33.96

| Unnamed: 0 | VendorID | passenger_count | trip_distance | RatecodeID | PULocationID | DOLocationID | payment_type | fare_amount | extra | mta_tax | tip_amount | tolls_amount | improvement_surcharge | total_amount | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 2.269900e+04 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 | 22699.000000 |

| mean | 5.675849e+07 | 1.556236 | 1.642319 | 2.913313 | 1.043394 | 162.412353 | 161.527997 | 1.336887 | 13.026629 | 0.333275 | 0.497445 | 1.835781 | 0.312542 | 0.299551 | 16.310502 |

| std | 3.274493e+07 | 0.496838 | 1.285231 | 3.653171 | 0.708391 | 66.633373 | 70.139691 | 0.496211 | 13.243791 | 0.463097 | 0.039465 | 2.800626 | 1.399212 | 0.015673 | 16.097295 |

| min | 1.212700e+04 | 1.000000 | 0.000000 | 0.000000 | 1.000000 | 1.000000 | 1.000000 | 1.000000 | -120.000000 | -1.000000 | -0.500000 | 0.000000 | 0.000000 | -0.300000 | -120.300000 |

| 25% | 2.852056e+07 | 1.000000 | 1.000000 | 0.990000 | 1.000000 | 114.000000 | 112.000000 | 1.000000 | 6.500000 | 0.000000 | 0.500000 | 0.000000 | 0.000000 | 0.300000 | 8.750000 |

| 50% | 5.673150e+07 | 2.000000 | 1.000000 | 1.610000 | 1.000000 | 162.000000 | 162.000000 | 1.000000 | 9.500000 | 0.000000 | 0.500000 | 1.350000 | 0.000000 | 0.300000 | 11.800000 |

| 75% | 8.537452e+07 | 2.000000 | 2.000000 | 3.060000 | 1.000000 | 233.000000 | 233.000000 | 2.000000 | 14.500000 | 0.500000 | 0.500000 | 2.450000 | 0.000000 | 0.300000 | 17.800000 |

| max | 1.134863e+08 | 2.000000 | 6.000000 | 33.960000 | 99.000000 | 265.000000 | 265.000000 | 4.000000 | 999.990000 | 4.500000 | 0.500000 | 200.000000 | 19.100000 | 0.300000 | 1200.290000 |

PACE: Construct #

Step 3a. Visualize you variables #

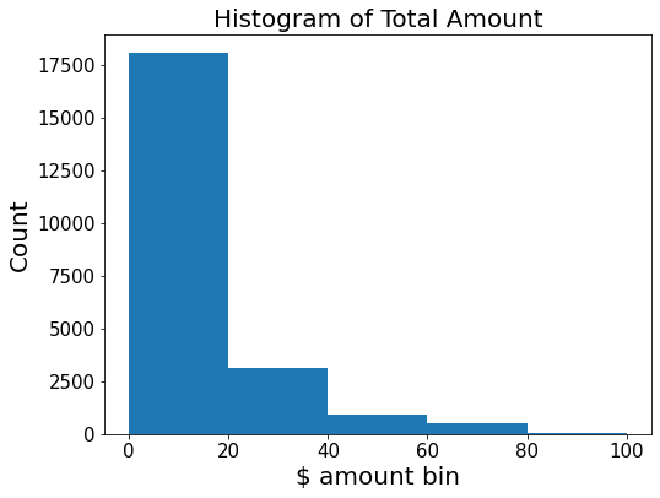

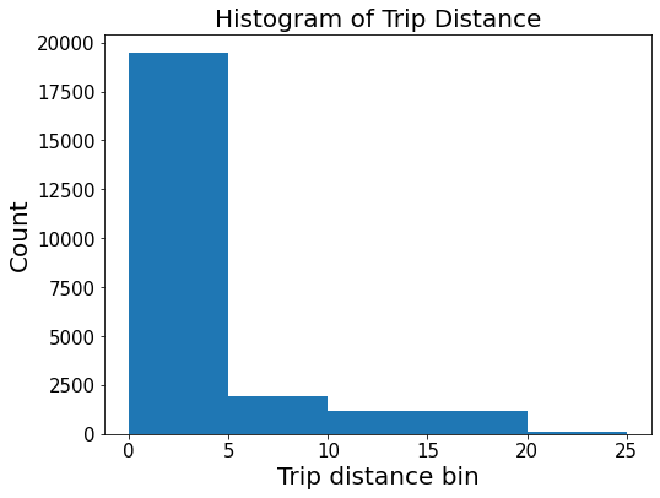

Instructions: Create a histogram for each of the two variables. Act and reflect on the following steps:

Histogram of your first variable (total_amount)

Histogram of your second variable (trip_distance)

Are your variables numerical (did the code work)?

# ==> EXEMPLAR CODE and OUTPUT

plt.figure(figsize=(8,6))

plt.xticks(fontsize=14); plt.yticks(fontsize=14)

df = df.sort_values(by='total_amount')

plt.hist(df['total_amount'],bins=[0,20,40,60,80,100])

plt.title('Histogram of Total Amount',fontsize=20)

plt.xticks(fontsize=15); plt.yticks(fontsize=15)

plt.xlabel('$ amount bin',fontsize=20)

plt.ylabel('Count', fontsize=20)

Text(0, 0.5, 'Count')

# ==> EXEMPLAR CODE and OUTPUT

plt.figure(figsize=(8,6))

#plt.xticks(fontsize=14); plt.yticks(fontsize=14)

df = df.sort_values(by='trip_distance')

plt.hist(df['trip_distance'],bins=[0,5,10,20,25])

plt.title('Histogram of Trip Distance',fontsize=20)

plt.xticks(fontsize=15); plt.yticks(fontsize=15)

plt.xlabel('Trip distance bin',fontsize=20)

plt.ylabel('Count', fontsize=20)

Text(0, 0.5, 'Count')

PACE: Execute #

Given your efforts, what can you summarize for DeShawn? #

Note for Learners: Your answer should address Luana’s request for a summary that covers the following points:

A summary of the data type of each variable

Any relevant and irrelevant columns

The minimum, mean, and max for the two most relevant variables

A summary of the data visualization